How A/B Testing Transforms Your SEM Campaigns

In the rapidly evolving world of digital marketing, A/B Testing in SEM has emerged as one of the most reliable strategies to optimize paid campaigns. Businesses no longer rely solely on intuition or generic ad templates. Instead, they leverage A/B testing to make data-driven decisions, improve click-through rates, and maximize conversions. Whether you’re running Google Ads for Ecommerce or experimenting with SEM with Social Media Ads, understanding the nuances of A/B testing in SEM can be a game-changer for your advertising strategy.

Understanding A/B Testing in SEM

At its core, A/B Testing in SEM involves comparing two or more versions of an ad to determine which one performs better. This could involve changing headlines, ad copy, call-to-action buttons, or even targeting parameters. The process allows marketers to scientifically measure engagement, clicks, and conversions, eliminating guesswork.

One significant advantage of A/B testing in SEM is its versatility. For instance, integrating AI-Powered Bidding Strategies can further enhance test results by automatically adjusting bids based on user behavior and historical performance. By combining smart bidding with A/B experiments, businesses can maximize ROI while continuously improving ad relevance.

The Psychology Behind Effective Ads

Human behavior plays a crucial role in ad performance. When performing A/B testing in SEM, understanding the target audience’s psychology is essential. People are naturally drawn to ads that resonate with their interests, evoke emotions, or provide clear solutions. For example, leveraging insights from Meta Ads Strategy campaigns can reveal patterns in how audiences respond to different messaging styles. These insights can then be applied to A/B testing in SEM, refining ad creatives for maximum engagement.

Planning Your A/B Tests

A structured approach to A/B testing in SEM ensures meaningful results. Start by defining your objective, whether it’s increasing CTR, improving conversion rate, or testing ad extensions. Next, identify the variables to test. Common variables include:

- Headlines

- Descriptions

- Display URLs

- Call-to-action buttons

- Visual elements

Marketers who use Chatbot Marketing Analytics can gain additional insights from user interactions, such as response times or drop-off points, to fine-tune ad messaging. When integrated into A/B testing in SEM, these data points help create a more targeted and effective advertising strategy.

Key Metrics to Track in A/B Testing in SEM

When performing A/B Testing in SEM, tracking the right metrics is critical. Not all data points contribute equally to understanding ad performance. The most important metrics include:

- Click-Through Rate (CTR): Measures how compelling your ad is to searchers. A higher CTR indicates that your ad resonates well with your target audience.

- Conversion Rate: Indicates the percentage of visitors who complete a desired action, such as purchasing a product or signing up for a newsletter. Integrating Google Ads for Ecommerce analytics can reveal conversion patterns across different product categories.

- Quality Score: Google assigns this score based on expected CTR, ad relevance, and landing page experience. Continuous A/B testing in SEM can improve this score by refining ad copy and targeting.

- Cost Per Click (CPC): The average amount you pay for each click. Efficient bidding strategies, including AI-Powered Bidding Strategies, can lower CPC while maintaining ad effectiveness.

- Engagement Metrics: If using SEM with Social Media Ads, metrics like likes, shares, and comments can offer insight into ad performance beyond simple clicks.

By tracking these metrics, marketers gain actionable insights that drive data-driven decisions in A/B testing in SEM, ensuring campaigns continuously improve over time.
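
The ratios above are simple to compute from raw counts. A minimal Python sketch, assuming you have exported impressions, clicks, conversions, and spend for each variant (the `AdStats` structure and all numbers are hypothetical), shows how CTR, conversion rate, CPC, and relative lift relate:

```python
from dataclasses import dataclass


@dataclass
class AdStats:
    """Raw counts for one ad variant (hypothetical structure for illustration)."""
    impressions: int
    clicks: int
    conversions: int
    cost: float  # total spend in account currency


def ctr(s: AdStats) -> float:
    # Click-through rate: clicks divided by impressions.
    return s.clicks / s.impressions if s.impressions else 0.0


def conversion_rate(s: AdStats) -> float:
    # Share of clicks (site visits) that completed the desired action.
    return s.conversions / s.clicks if s.clicks else 0.0


def cpc(s: AdStats) -> float:
    # Average cost per click: total spend divided by clicks.
    return s.cost / s.clicks if s.clicks else 0.0


def relative_lift(control: float, variant: float) -> float:
    # Relative change of the variant versus control (0.18 means +18%).
    return (variant - control) / control if control else float("nan")


# Hypothetical numbers for two ad variants.
a = AdStats(impressions=10_000, clicks=400, conversions=20, cost=300.0)
b = AdStats(impressions=10_000, clicks=472, conversions=26, cost=330.4)

for name, s in (("A", a), ("B", b)):
    print(f"{name}: CTR={ctr(s):.2%}  CVR={conversion_rate(s):.2%}  CPC={cpc(s):.2f}")
print(f"CTR lift of B over A: {relative_lift(ctr(a), ctr(b)):+.1%}")
```

Relative lift is what headline figures such as "CTR increased by 18%" usually describe; on its own it says nothing about whether the difference is statistically reliable.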

Implementing A/B Tests: Step by Step

Executing A/B Testing in SEM requires a systematic approach. A clear framework ensures results are reliable and actionable:

Define Your Hypothesis: Start with a specific question, such as “Will changing the headline improve CTR?”

Select Variables to Test: Identify the elements of your ad to modify, like ad copy, images, or call-to-action buttons. For campaigns leveraging Meta Ads Strategy, testing different ad formats can reveal audience preferences.

Split Your Audience: Divide your target audience randomly to ensure unbiased results. For Chatbot Marketing Analytics, this can include testing different conversation flows linked to ad campaigns.
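
Ad platforms generally handle the traffic split for their own experiments. When you control assignment yourself, for example on landing pages or chatbot flows, one common approach is to hash a stable user identifier so each person always lands in the same variant. A minimal sketch, assuming you have such an identifier (the function name, experiment name, and user IDs are hypothetical):

```python
import hashlib
from collections import Counter


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    # Hash "experiment:user" so a user always gets the same variant within one
    # experiment, while separate experiments split independently of each other.
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


# Sanity check: the split across many hypothetical user IDs should be close to 50/50.
counts = Counter(assign_variant(f"user-{i}", "headline-test") for i in range(10_000))
print(counts)
```

Including the experiment name in the hash keeps assignments in separate tests from lining up with each other.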

Run the Experiment: Launch both versions of the ad simultaneously. Keep the test running until it reaches the sample size you planned in advance, rather than stopping at the first promising result, to avoid misleading conclusions.

Analyze Results: Compare performance metrics. A/B test results should guide which ad version is most effective. Combining this with AI-Powered Object Recognition insights can help refine creative assets for better audience engagement.

Implement the Winning Variant: Once a winner emerges, apply the insights across campaigns and plan the next test cycle.
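
To check whether a difference in CTR or conversion rate is more than noise, a two-proportion z-test is one standard choice. The sketch below assumes large samples (so the normal approximation holds) and a sample size fixed before launch; the click counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(successes_a: int, n_a: int, successes_b: int, n_b: int):
    """Two-sided z-test for a difference between two rates (e.g. CTR of A vs B).
    Returns (z statistic, p-value). Relies on the normal approximation."""
    p_a, p_b = successes_a / n_a, successes_b / n_b
    # Pooled rate under the null hypothesis that both variants share one true rate.
    p_pool = (successes_a + successes_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical: variant A got 400 clicks and B got 472, each from 10,000 impressions.
z, p_value = two_proportion_z_test(400, 10_000, 472, 10_000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

Here z is roughly 2.5 and p roughly 0.013, below the common 0.05 convention, so the gap is unlikely to be chance alone. Checking this repeatedly while the test runs and stopping at the first significant result inflates false positives.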

Common Pitfalls in A/B Testing in SEM

Even seasoned marketers can make mistakes when conducting A/B testing in SEM. Awareness of these pitfalls ensures more accurate and actionable results:

- Testing Too Many Variables at Once: Changing multiple elements simultaneously can obscure which factor caused performance changes.

- Insufficient Sample Size: Running tests with too few impressions leads to inconclusive results.

- Ignoring Seasonal Trends: Audience behavior may fluctuate during holidays or events, which can skew A/B test results.

- Overlooking Landing Page Experience: Even high-performing ads can underperform if the landing page is slow or confusing.

- Neglecting Cross-Channel Insights: Integrating data from SEM with Social Media Ads campaigns or Google Ads for Ecommerce can offer a broader understanding of user behavior.

By avoiding these common mistakes, A/B testing in SEM can produce reliable, actionable insights that drive meaningful improvements in ad performance.
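
To avoid the insufficient-sample-size pitfall, estimate the required sample size before launching. The sketch below uses the standard normal-approximation formula for comparing two proportions; the baseline CTR, minimum detectable lift, significance level, and power are assumptions you would set for your own campaign.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate impressions (or visitors) needed per variant to detect a
    relative lift in a rate such as CTR, using a two-sided test."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    if relative_lift == 0 or not 0 < p2 < 1:
        raise ValueError("lift must be non-zero and keep the rate between 0 and 1")
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for power = 0.8
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Hypothetical: 4% baseline CTR, aiming to detect a 10% relative lift (4.0% -> 4.4%).
print(sample_size_per_variant(baseline=0.04, relative_lift=0.10))
```

With these inputs the answer is about 39,500 impressions per variant. Smaller expected lifts need far larger samples, because the required size grows roughly with the inverse square of the absolute difference you want to detect.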

Advanced Strategies for A/B Testing in SEM

For marketers seeking a competitive edge, advanced strategies can enhance A/B testing in SEM outcomes:

AI-Powered Optimization

Using AI-Powered Bidding Strategies allows automatic bid adjustments based on real-time user behavior. AI can also help predict which ad variations are likely to perform best before running the test, saving time and budget.

Cross-Platform Testing

Integrating SEM with Social Media Ads provides a holistic view of ad performance. For example, if a headline performs well on Google Ads, testing it across Facebook or Instagram can validate whether the message resonates across channels.

Visual Recognition Tools

Incorporating AI-Powered Object Recognition enables marketers to identify which visual elements attract attention. This insight can guide the design of more effective ad creatives during A/B testing in SEM.

Analytics-Driven Iterations

Using Chatbot Marketing Analytics, marketers can track user engagement beyond clicks. Insights from chat interactions, drop-off points, and response patterns can refine ad copy and targeting.

Choosing the Right Audience for A/B Testing in SEM

Audience selection is a critical step in A/B Testing in SEM. Even the most optimized ad may fail if it reaches the wrong people. For effective targeting:

- Demographics & Location: Adjust campaigns based on age, gender, income, or region. For instance, Google Ads for Ecommerce campaigns often perform better when segmented by location, as purchasing behavior varies geographically.

- Device Targeting: Mobile and desktop users interact differently with ads. Running separate A/B testing in SEM experiments for devices can reveal which messaging works best per platform.

- Behavioral Insights: Data from SEM with Social Media Ads helps identify users who are more likely to convert, allowing smarter test targeting.

- Custom & Lookalike Audiences: Using past converters as a model can improve test accuracy. Integrating AI-Powered Bidding Strategies ensures these high-value audiences get priority placements.

Crafting High-Converting Ad Copies

The heart of A/B testing in SEM lies in the ad copy itself. Subtle variations can drastically impact CTR and conversion rates. Key strategies include:

- Action-Oriented Headlines: Use clear, urgent language. The same headline may perform differently for mobile and desktop audiences in an A/B Testing in SEM experiment.

- Benefit-Focused Descriptions: Highlight what users gain. For Meta Ads Strategy, benefits are often tied to problem-solving, which resonates strongly with audiences.

- Call-to-Action Variations: Test phrases like “Buy Now,” “Learn More,” or “Get Started” in your A/B Testing in SEM campaigns. Subtle differences can shift engagement patterns.

- Emotional Triggers: Incorporate psychology: fear of missing out, curiosity, or exclusivity can be tested during A/B testing in SEM to see which drives higher engagement.

- Integrating Visual Elements: When visuals are part of the ad, leveraging AI-Powered Object Recognition can determine which images or icons attract attention most effectively.
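
When you test three CTA phrases at once, such as “Buy Now,” “Learn More,” and “Get Started,” a chi-square test of independence checks whether click-through rates differ across the variants at all. In this minimal sketch (the counts are hypothetical), the table has three rows and two columns (click, no click), giving 2 degrees of freedom; for exactly 2 degrees of freedom the chi-square p-value is exp(-chi2 / 2), so no statistics library is needed.

```python
from math import exp


def chi_square_three_variants(clicks, impressions):
    """Chi-square test of independence for exactly three ad variants.

    The contingency table is 3 variants x (click, no click), so it has
    (3 - 1) * (2 - 1) = 2 degrees of freedom. For 2 degrees of freedom the
    chi-square survival function is exp(-x / 2), which gives the p-value directly.
    """
    if len(clicks) != 3 or len(impressions) != 3:
        raise ValueError("this sketch handles exactly three variants")
    total_clicks, total_impressions = sum(clicks), sum(impressions)
    if total_clicks == 0 or total_clicks == total_impressions:
        raise ValueError("need both clicks and non-clicks to run the test")
    overall_ctr = total_clicks / total_impressions
    chi2 = 0.0
    for c, n in zip(clicks, impressions):
        expected_clicks = n * overall_ctr
        expected_non_clicks = n - expected_clicks
        chi2 += (c - expected_clicks) ** 2 / expected_clicks
        chi2 += ((n - c) - expected_non_clicks) ** 2 / expected_non_clicks
    return chi2, exp(-chi2 / 2)


# Hypothetical counts for "Buy Now", "Learn More", "Get Started".
chi2, p = chi_square_three_variants([420, 465, 400], [10_000, 10_000, 10_000])
print(f"chi2 = {chi2:.2f}, p = {p:.3f}")
```

With these counts the p-value is about 0.07, so the spread between the three CTAs could plausibly be chance. If the overall test is significant, follow-up pairwise comparisons should use a multiple-comparison correction such as Bonferroni.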

Using Landing Pages in A/B Testing in SEM

Even the best ad cannot convert without an optimized landing page. A/B testing should extend to landing pages:

- Headlines & Copy: Does the landing page reinforce the ad’s promise? Testing alternative headlines alongside A/B testing in SEM can reveal mismatches.

- Forms & CTA Buttons: Test placement, length, and colors. Using Chatbot Marketing Analytics, you can monitor drop-off points and user frustration.

- Page Load Speed: Faster pages retain users better. Slow pages undermine A/B testing in SEM results.

- Mobile Optimization: A majority of searches now happen on mobile devices, so mobile-friendly layouts can change which ad variant performs best.

Real-World Examples of A/B Testing in SEM

To illustrate, here are a few examples of A/B Testing in SEM in action:

| Campaign Type | Test Variable | Result |
|---|---|---|
| Google Ads for Ecommerce | Headline text | CTR increased by 18% |
| SEM with Social Media Ads | Image selection | Conversion rate improved by 12% |
| Meta Ads Strategy | CTA button color | Engagement rose 9% |
| AI-Powered Bidding Strategies | Bid adjustment timing | CPC decreased by 15% |
| Chatbot Marketing Analytics | Response flow | Leads doubled |

| AI-Powered Object Recognition | Product image variations | CTR improved 20% |

These examples show how A/B testing in SEM integrates with modern marketing tools to maximize results.

Advanced Segmentation Techniques

Segmentation improves testing precision in A/B Testing in SEM:

- Behavioral Segmentation: Users grouped by past interactions with your site or ads.

- Interest-Based Segmentation: Identified through SEM with Social Media Ads data.

- Predictive Segmentation: AI tools, such as those behind AI-Powered Bidding Strategies, predict which users are most likely to convert.

- Custom Event Tracking: Using Chatbot Marketing Analytics, track events like form submissions or downloads to refine segmentation.

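Segmented testing shows whether a variant performs differently for different audiences. Randomizing within each segment keeps the comparison fair, but each segment then needs enough traffic on its own to produce statistically valid results.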
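
A per-segment breakdown makes it visible when a variant's effect differs between audiences. This sketch, with hypothetical counts and a hypothetical row format, computes conversion rates per segment and variant:

```python
from collections import defaultdict


def rates_by_segment(rows):
    """rows: iterable of (segment, variant, clicks, conversions) tuples.
    Returns {(segment, variant): conversion rate}."""
    totals = defaultdict(lambda: [0, 0])  # (segment, variant) -> [clicks, conversions]
    for segment, variant, clicks, conversions in rows:
        totals[(segment, variant)][0] += clicks
        totals[(segment, variant)][1] += conversions
    return {key: conv / clicks for key, (clicks, conv) in totals.items() if clicks}


# Hypothetical results for two variants, split by device.
rows = [
    ("mobile", "A", 3_000, 90), ("mobile", "B", 3_000, 120),
    ("desktop", "A", 2_000, 100), ("desktop", "B", 2_000, 90),
]
for (segment, variant), rate in sorted(rates_by_segment(rows).items()):
    print(f"{segment:8} {variant}: {rate:.2%}")
```

Here variant B wins overall (4.2% vs 3.8%) and on mobile, but loses on desktop, a pattern an aggregate-only view would hide. Decide on segments before the test; slicing results afterward in many ways invites false positives.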

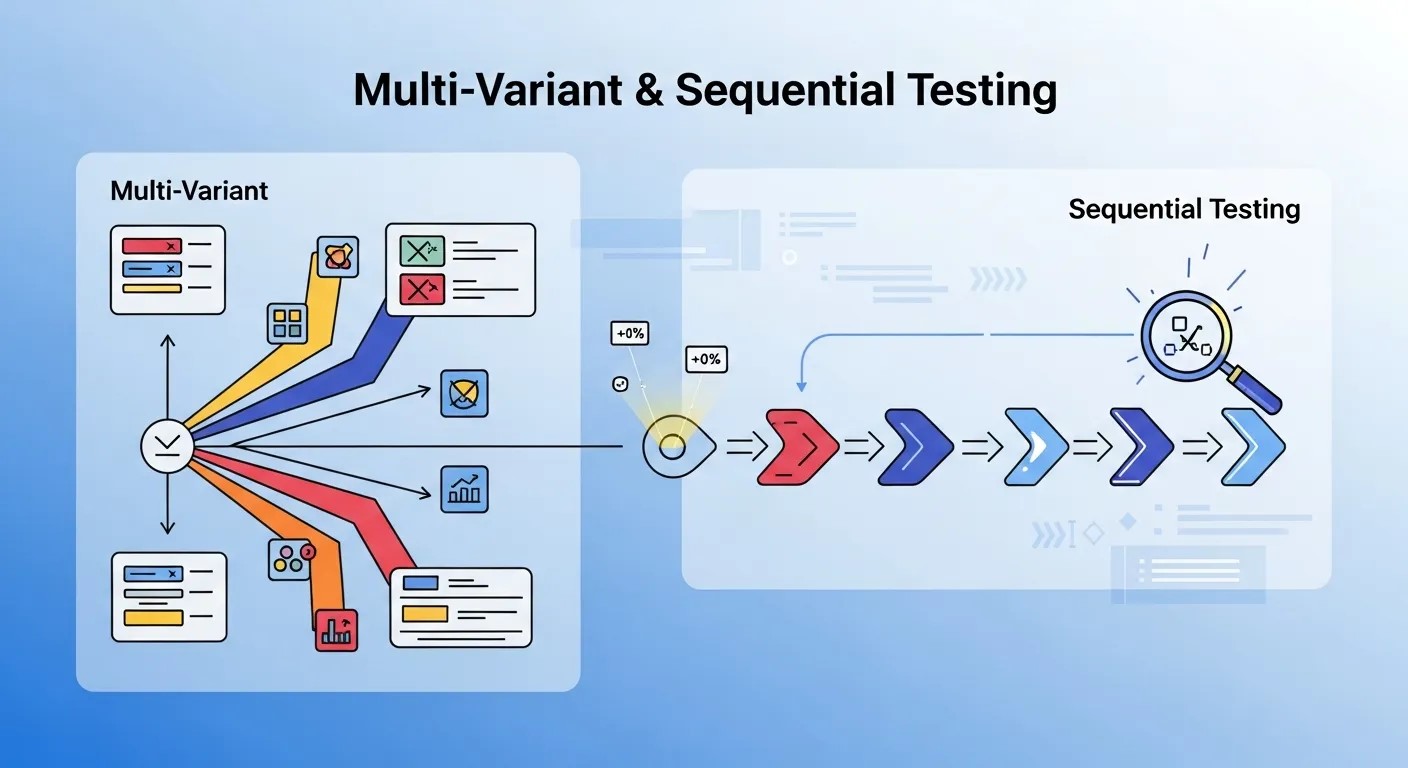

Multivariate & Sequential Testing

Beyond traditional A/B testing, marketers use advanced methods:

- Multivariate Testing (MVT): Tests multiple elements simultaneously, e.g., headline + CTA + image.

- Sequential Testing: Introduces new variants gradually, ideal for campaigns with long-term data collection.
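
The main cost of multivariate testing is that the number of combinations multiplies. A quick sketch with hypothetical ad elements counts the cells in a full-factorial test:

```python
from itertools import product

# Hypothetical ad elements for a full-factorial multivariate test.
headlines = ["Free Shipping Today", "New Season Styles"]
ctas = ["Buy Now", "Shop Now", "Get Started"]
images = ["product-only", "lifestyle"]

# Every headline x CTA x image combination becomes its own test cell.
combinations = list(product(headlines, ctas, images))
print(len(combinations))  # 12

for cell in combinations[:3]:
    print(cell)
```

Each of the 12 cells needs enough traffic on its own, so a full-factorial test needs roughly 12 times the per-variant sample of a simple A/B test. This is why simpler A/B tests are often the better choice for low-traffic campaigns.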

Combining A/B testing in SEM with AI-Powered Object Recognition and Chatbot Marketing Analytics ensures multi-dimensional insights without overwhelming human analysis.

Deep Dive: Why A/B Testing in SEM Matters for Modern Marketers

In today’s digital landscape, relying solely on intuition or creative instincts no longer guarantees success. Businesses invest thousands in advertising campaigns across multiple channels, from search engines to social media platforms. A/B Testing in SEM empowers marketers to move beyond assumptions, providing a scientific framework to understand audience behavior. By systematically comparing ad variations, marketers can quantify the impact of small changes on click-through rates, conversion rates, and overall ROI.

For example, Google Ads for Ecommerce campaigns often involve multiple product categories. A headline that works for fashion products might underperform for electronics. Through A/B Testing in SEM, marketers can identify these nuances and tailor messaging to different segments, ultimately saving budget while increasing sales.

Moreover, modern tools like AI-Powered Bidding Strategies allow automated adjustments based on live performance data. When combined with A/B tests, these strategies ensure that the highest-performing ad variations receive the most exposure. This synergy between human-led experimentation and AI-driven optimization represents the future of search engine marketing.

Integrating A/B Testing in SEM with Social Media Campaigns

While traditional SEM focuses on search engine ads, integrating SEM with Social Media Ads creates a holistic marketing approach. Social media platforms provide unique behavioral insights that can inform search campaigns. For instance, engagement metrics on Facebook or Instagram can highlight which ad creatives resonate with your audience. These insights, when applied to A/B Testing in SEM, help optimize headlines, descriptions, and even images for search campaigns.

Marketers who experiment across both channels can identify patterns that single-channel testing might miss. A headline that drives clicks on social media may perform differently in search, revealing subtleties in user intent and engagement. This cross-channel perspective maximizes ROI by aligning messaging with platform-specific behavior.

Leveraging AI for Smarter A/B Tests

Artificial intelligence has transformed A/B Testing in SEM from a manual process to a data-driven, predictive practice. AI-Powered Bidding Strategies automatically adjust bids for high-performing ad variants in real time, ensuring optimal exposure for ads most likely to convert.
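
Automatically shifting exposure toward better-performing variants resembles a multi-armed bandit. Google does not publish the internals of its bidding algorithms, so the sketch below is not how any ad platform works; it illustrates one well-known bandit technique, Thompson sampling with a Beta prior on each variant's CTR, on simulated traffic with hypothetical true CTRs.

```python
import random


def thompson_pick(stats, rng):
    """Choose the next variant to serve. `stats` maps variant -> (clicks, impressions)."""
    best_variant, best_draw = None, -1.0
    for variant, (clicks, impressions) in stats.items():
        # Sample a plausible CTR from the Beta posterior (uniform Beta(1, 1) prior).
        draw = rng.betavariate(1 + clicks, 1 + impressions - clicks)
        if draw > best_draw:
            best_variant, best_draw = variant, draw
    return best_variant


def simulate(true_ctr, rounds=20_000, seed=7):
    """Serve simulated impressions; `true_ctr` holds hypothetical, normally unknown CTRs."""
    rng = random.Random(seed)
    stats = {variant: (0, 0) for variant in true_ctr}
    for _ in range(rounds):
        variant = thompson_pick(stats, rng)
        clicks, impressions = stats[variant]
        clicked = rng.random() < true_ctr[variant]
        stats[variant] = (clicks + int(clicked), impressions + 1)
    return stats


# Impressions tend to drift toward variant B, which has the higher simulated CTR.
print(simulate({"A": 0.040, "B": 0.047}))
```

The trade-off: a bandit wastes fewer impressions on weak variants, but because traffic is no longer split evenly, its results are harder to analyze with the fixed-sample tests used in a classic A/B test.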

Additionally, AI can help predict which variations are likely to succeed before tests run. For instance, machine learning models analyze historical campaign data to recommend headline adjustments, image swaps, or CTA modifications. Integrating these insights into A/B Testing in SEM reduces wasted impressions and accelerates campaign optimization.

AI-Powered Object Recognition adds another layer of precision. By analyzing visual elements in ads, AI identifies which images attract attention and engagement. This is especially useful for Google Ads for Ecommerce, where product images play a critical role in driving conversions. Combining visual insights with copy testing ensures a comprehensive optimization strategy.

Step-by-Step Framework for A/B Testing in SEM

To maximize results, marketers should adopt a structured framework for A/B Testing in SEM. The following steps illustrate a full-cycle approach:

- Define Clear Objectives: Identify whether the goal is CTR, conversion rate, engagement, or ROI improvement.

- Select Variables: Choose which elements to test: headlines, descriptions, images, CTAs, or targeting parameters.

- Segment Your Audience: Divide users by demographics, devices, or behavior. Using Chatbot Marketing Analytics can further refine segmentation.

- Set Sample Size and Duration: Estimate in advance how many impressions or visitors each variant needs to detect the change you care about. Short tests or small audiences may lead to unreliable results.

- Launch and Monitor: Track performance metrics in real time. Tools integrated with SEM with Social Media Ads provide cross-channel visibility.

- Analyze and Implement: Determine the winning variation and apply insights to all campaigns.

- Iterate Continuously: Marketing is dynamic. Continuous A/B Testing in SEM ensures campaigns remain optimized over time.

Real-World Case Study: Ecommerce Success with A/B Testing in SEM

Consider a mid-size ecommerce company selling fashion and tech products. They implemented A/B Testing in SEM alongside Google Ads for Ecommerce. By testing two headline variations across desktop and mobile users, the team discovered that playful, curiosity-driven headlines worked best for fashion, while straightforward, benefit-focused headlines converted better for tech products.

After integrating AI-Powered Bidding Strategies, the company automatically increased bids for the top-performing ads during peak shopping hours. Additionally, Chatbot Marketing Analytics revealed friction points in the checkout process, allowing them to optimize landing pages. Within two months, conversion rates increased by 23%, and overall ad spend efficiency improved by 18%.

Conclusion

A/B Testing in SEM is a powerful tool that allows marketers to make data-driven decisions, optimize ad performance, and maximize ROI. By systematically testing headlines, ad copy, visuals, and calls-to-action, businesses gain actionable insights into audience behavior. Integrating AI-powered strategies, leveraging analytics from Chatbot Marketing Analytics, and combining insights from SEM with Social Media Ads ensures campaigns are smarter and more effective. Over time, these experiments not only improve conversions but also enhance overall marketing efficiency, helping businesses stay competitive and deliver better results across all digital channels.

Frequently Asked Questions (FAQ)

What is A/B Testing in SEM?

A/B Testing in SEM is a method of comparing two or more versions of a search engine ad to determine which one performs better. It helps marketers optimize headlines, ad copy, visuals, and CTAs to improve click-through rates and conversions.

Why is A/B Testing Important for SEM Campaigns?

A/B Testing in SEM eliminates guesswork by providing data-driven insights. It allows advertisers to understand audience behavior, refine messaging, reduce wasted ad spend, and improve overall campaign ROI.

How Do I Set Up an A/B Test in SEM?

To set up an A/B test:

- Define a clear objective (CTR, conversions, engagement).

- Identify the variable to test (headline, ad copy, CTA).

- Split your audience randomly.

- Run the ads simultaneously until each variant reaches the sample size you planned for.

- Analyze results and implement the winning variant.

How Long Should an A/B Test Run in SEM?

An A/B test should run long enough to achieve statistically significant results. Depending on traffic volume, this could range from a few days to several weeks. High-traffic campaigns may reach significance faster than low-traffic ones.
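
Turning a required sample size into a run time is simple arithmetic. A minimal sketch, assuming a per-variant sample size has already been estimated with a power calculation (the function name and inputs below are illustrative):

```python
from math import ceil


def estimated_test_days(required_per_variant: int, num_variants: int,
                        daily_eligible_traffic: int, traffic_share: float = 1.0) -> int:
    """Days needed when `traffic_share` of daily eligible traffic enters the test
    and is split evenly across variants."""
    daily_in_test = daily_eligible_traffic * traffic_share
    if daily_in_test <= 0:
        raise ValueError("daily traffic entering the test must be positive")
    return ceil(required_per_variant * num_variants / daily_in_test)


# Illustrative inputs: ~39,500 impressions needed per variant, 2 variants,
# 5,000 eligible impressions per day, all of them included in the test.
days = estimated_test_days(required_per_variant=39_476, num_variants=2,
                           daily_eligible_traffic=5_000)
full_weeks = ceil(days / 7) * 7  # round up to whole weeks to cover weekly cycles
print(days, full_weeks)  # 16 21
```

Many practitioners round up to whole weeks, as the last step does, so every weekday is represented equally in the results.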

Can I Test Multiple Elements at Once?

Yes, using multivariate testing, you can test several elements simultaneously, such as headline, image, and CTA. However, simpler A/B tests often provide clearer insights for individual variables.

How Do AI Tools Enhance A/B Testing in SEM?

AI-powered tools can predict which ad variations are likely to perform best, automate bid adjustments, and analyze user interactions. Integrating AI-Powered Bidding Strategies and AI-Powered Object Recognition can significantly improve ad optimization.

Can Social Media Data Improve A/B Testing in SEM?

Absolutely. Insights from SEM with Social Media Ads campaigns can guide ad copy, visuals, and audience targeting, ensuring that search campaigns align with user preferences and behavior across platforms.

How Often Should I Run A/B Tests for SEM Campaigns?

A/B Testing in SEM should be an ongoing process. Continuous experimentation helps adapt to changing audience behavior, seasonal trends, and market dynamics, ensuring campaigns remain optimized over time.
